
Workbench Architecture

Example Workbench and Workbench Anomaly Detection (AD) architectures are detailed below:

- Workbench "stand-alone/single node" architecture with single Engage Data-Center

- Workbench "Cluster" HA architecture with single Engage Data-Center

- Workbench "Cluster" HA architecture with multi Engage Data-Center (no/limited Metric ingest)

- Workbench "stand-alone/single node" architecture with multi Engage Data-Center

- Workbench "Cluster" architecture with multi Engage Data-Center

- Workbench Anomaly Detection (AD) with a Workbench "Cluster" HA architecture within a single Engage Data-Center

- Workbench Anomaly Detection (AD) HA with a Workbench "Cluster" HA architecture within a multi Engage Data-Center

Workbench Deployment Architecture

Workbench integrates with the Genesys Engage platform; as such, Workbench requires and leverages the following Genesys Engage objects:

| Component | Description/Comments |
|---|---|
| Genesys Engage Workbench Client application/object | enables Engage CME configured Users to log into Workbench |
| Genesys Engage Workbench IO (Server) application/object | enables integration from Workbench to the Engage CS, SCS and MS |
| Genesys Engage Configuration Server application/object | enables integration from Workbench to the Engage CS; authentication and Config Changes |
| Genesys Engage Solution Control Server application/object | enables integration from Workbench to the Engage SCS; Alarms to WB from SCS |
| Genesys Engage Message Server application/object | enables integration from Workbench to the Engage MS; Config change ChangedBy metadata |
| Genesys Engage SIP Server application/object (optional) | enables integration from Workbench to the Engage SIP Server, enabling the Channel Monitoring feature |

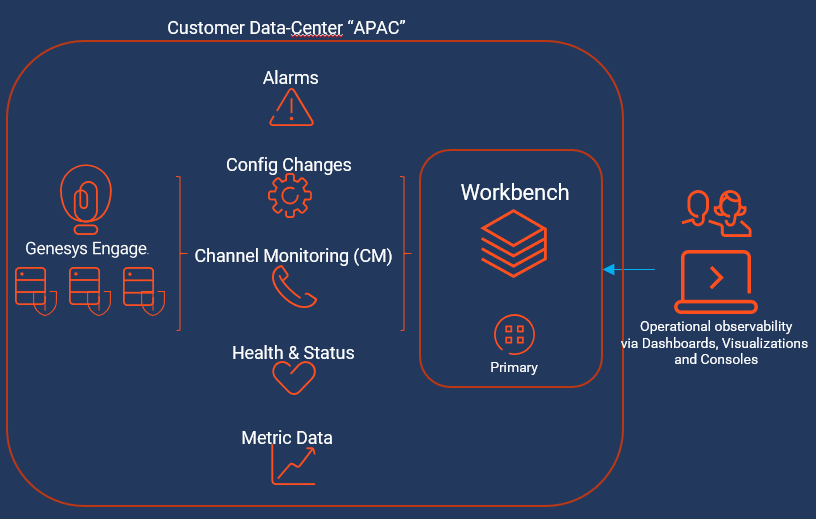
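The table above can be read as a provisioning checklist. The minimal Python sketch below is purely illustrative: it encodes the table and reports which required objects are still missing. The object labels, the `EngageObject` type and both helper functions are hypothetical and are not part of any Genesys or Workbench API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EngageObject:
    """One Genesys Engage application/object that Workbench relies on (hypothetical model)."""
    name: str
    purpose: str
    required: bool = True


# Mirrors the table above; the labels are illustrative, not CME object names.
WORKBENCH_ENGAGE_OBJECTS = (
    EngageObject("Workbench Client", "CME configured Users log into Workbench"),
    EngageObject("Workbench IO", "integration with the Engage CS, SCS and MS"),
    EngageObject("Configuration Server", "authentication and Config Changes"),
    EngageObject("Solution Control Server", "Alarms to WB from SCS"),
    EngageObject("Message Server", "Config change ChangedBy metadata"),
    EngageObject("SIP Server", "Channel Monitoring feature", required=False),
)


def missing_required(provisioned: set) -> list:
    """Return the names of required objects not present in `provisioned`."""
    return [o.name for o in WORKBENCH_ENGAGE_OBJECTS
            if o.required and o.name not in provisioned]


def channel_monitoring_possible(provisioned: set) -> bool:
    """Channel Monitoring depends on the optional SIP Server object."""
    return "SIP Server" in provisioned


if __name__ == "__main__":
    have = {"Workbench Client", "Workbench IO", "Configuration Server",
            "Solution Control Server"}
    print(missing_required(have))             # ['Message Server']
    print(channel_monitoring_possible(have))  # False
```

Keeping the SIP Server as an optional entry reflects the table: Workbench runs without it, but the Channel Monitoring feature is then unavailable.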

Workbench "stand-alone/single node" architecture with single Engage Data-Center

The example architecture below illustrates a single Workbench Primary node within a single Data-Center:

- A Genesys Engage single Data-Center/Site (e.g. APAC) deployment

- Workbench integrates with the Engage Master Configuration Server (CS)

- Workbench integrates with the Engage Solution Control Server (SCS) and associated Message Server (MS)

- The Workbench Channel Monitoring feature functions via the WB IO application integrating with the respective Engage SIP Server

- Workbench Users connect to the Workbench Primary (WB IO application) instance and can access the features of WB

- If the Workbench Agent component is installed on any Genesys Application Servers (e.g. SIP, URS, FWK), the Metric data from those hosts will be sent to the Workbench node for storage, providing visualizations via the Workbench Dashboard feature

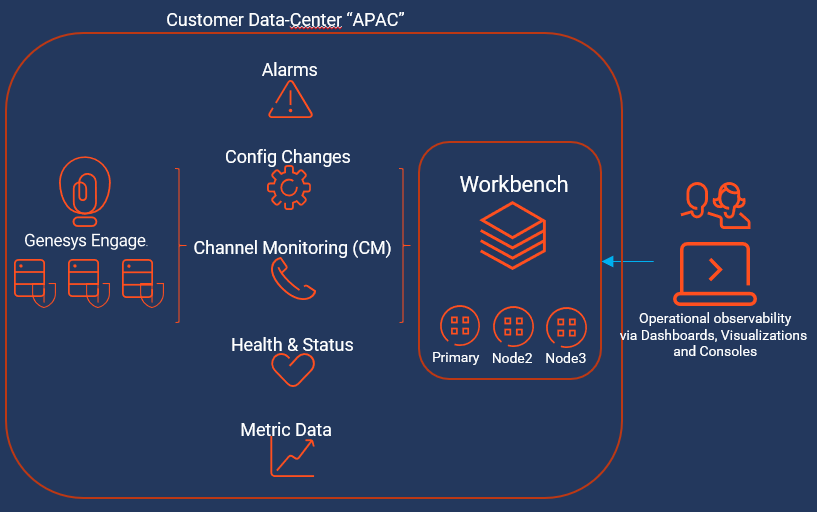

Workbench "Cluster" HA architecture with single Engage Data-Center

The example architecture below illustrates a Workbench "Cluster" within a single Data-Center:

- A Genesys Engage single Data-Center/Site (e.g. APAC) deployment

- The Workbench Primary node integrates with the Engage Master Configuration Server (CS)

- The Workbench Primary node integrates with the Engage Solution Control Server (SCS) and associated Message Server (MS)

- The Workbench Channel Monitoring feature functions via the WB IO application integrating with the respective Engage SIP Server

- Workbench Users connect to the Workbench Primary (WB IO application) instance and can access the features of WB

- For HA resiliency, Workbench Node 2 contains event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

- For HA resiliency, Workbench Node 3 contains event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

- Workbench High-Availability (HA) provides resiliency of event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

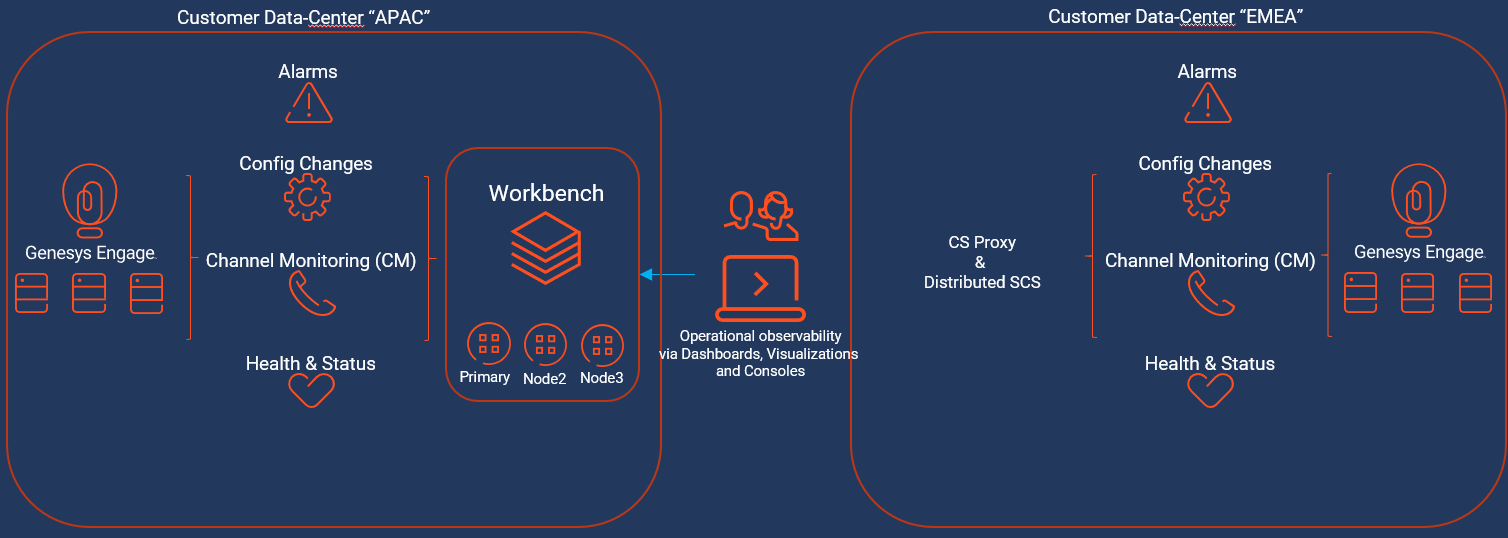
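Why three nodes? The section does not state the rationale, but a plausible reading, assuming the standard majority-quorum behaviour of ZooKeeper (Elasticsearch master election is likewise majority based), is that the cluster stays available only while a strict majority of its voting nodes is up. The small Python sketch below computes this; it is generic quorum arithmetic, not Workbench code.

```python
def quorum_size(nodes: int) -> int:
    """Smallest strict majority of an ensemble with `nodes` voting members."""
    if nodes < 1:
        raise ValueError("an ensemble needs at least one voting node")
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many voting nodes can fail while a quorum still remains."""
    return nodes - quorum_size(nodes)


for n in (1, 2, 3):
    print(f"{n} node(s): quorum={quorum_size(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")
# 1 node(s): quorum=1, tolerates 0 failure(s)
# 2 node(s): quorum=2, tolerates 0 failure(s)
# 3 node(s): quorum=2, tolerates 1 failure(s)
```

Under that assumption, adding only a second node gives no extra failure tolerance; a Primary plus Node 2 and Node 3 is the smallest footprint that survives the loss of any one node.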

Workbench "Cluster" HA architecture with multi Engage Data-Center (no/limited Metric ingest)

- This architecture has no/limited Engage Metric data ingestion by design.

- This architecture is best suited for customers who do NOT wish to ingest Metric data from their Genesys Application Servers (e.g. SIP, URS, FWK) but wish to leverage the other features of Workbench via a minimal HA footprint

- The footprint could be reduced further by deploying only a Workbench Primary node at the APAC Data-Center; this provides no HA, but minimizes the Workbench footprint investment.

The example architecture below illustrates a Workbench Cluster within a multi Data-Center deployment with no/limited Engage Metric data ingestion:

- A Genesys Engage multi Data-Center/Site (e.g. APAC & EMEA) deployment

- A Workbench Primary, Node 2 and Node 3 Cluster, installed only at the APAC Data-Center

- The Workbench Primary at the APAC Data-Center integrates with the respective local Configuration Server

- The Workbench Primary at the APAC Data-Center integrates with the respective local Solution Control Server and associated Message Server

- The Workbench Channel Monitoring feature functions via the WB IO application integrating with the respective Engage SIP Server

- EMEA Alarms and Changes events would be ingested into the APAC Workbench Cluster via the Engage CS Proxy and Distributed SCS components

- Workbench Users at both APAC and EMEA would connect to the APAC Workbench Primary (WB IO application) instance and can access the features of WB

- By default, Workbench Agents would only be installed at the APAC Data-Center, on the Workbench hosts

- Installing the Workbench Agent Remote component on the Genesys Application Servers in the APAC Data-Center is optional

- Workbench Agents would NOT be installed at the EMEA Data-Center, because the resulting Metric event data would have to traverse the WAN

- Workbench High-Availability (HA) provides resiliency of event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

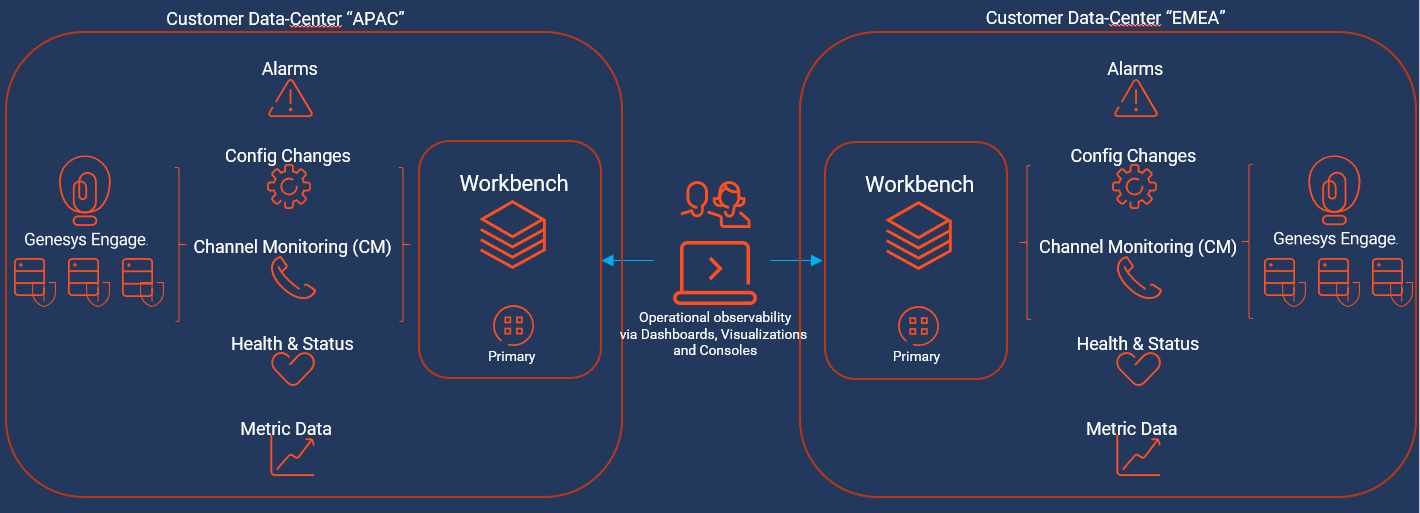
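The agent placement rules listed above for this footprint can be summarised as a small decision function. This is a hypothetical Python sketch: the `Host` type, the `WORKBENCH_DC` constant and the host names are illustrative assumptions, not Workbench settings.

```python
from dataclasses import dataclass

# Assumption: APAC is the only Data-Center hosting the Workbench Cluster.
WORKBENCH_DC = "APAC"


@dataclass(frozen=True)
class Host:
    name: str
    data_center: str
    is_workbench_host: bool  # True for Workbench Primary, Node 2 and Node 3


def agent_for(host: Host, install_remote_agents: bool = False) -> str:
    """Return which agent, if any, to install on `host` in this footprint."""
    if host.data_center != WORKBENCH_DC:
        # No agents outside APAC: their Metric data would have to cross the WAN.
        return "none"
    if host.is_workbench_host:
        return "Workbench Agent"  # installed on the Workbench hosts by default
    # APAC Genesys Application Servers: Workbench Agent Remote is optional.
    return "Workbench Agent Remote" if install_remote_agents else "none"


hosts = [
    Host("wb-primary", "APAC", True),
    Host("apac-sip-01", "APAC", False),
    Host("emea-sip-01", "EMEA", False),
]
for h in hosts:
    print(h.name, "->", agent_for(h, install_remote_agents=True))
# wb-primary -> Workbench Agent
# apac-sip-01 -> Workbench Agent Remote
# emea-sip-01 -> none
```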

Workbench "stand-alone/single node" architecture with multi Engage Data-Center

The example architecture below illustrates a single Workbench Primary node per Data-Center within a multi Data-Center deployment:

- A Genesys Engage multi Data-Center/Site (e.g. APAC & EMEA) deployment

- The Workbench Primary at each Data-Center integrates with the respective local Configuration Server

- The Workbench Primary at each Data-Center integrates with the respective local Solution Control Server and associated Message Server

- The Workbench Channel Monitoring feature functions via the WB IO application integrating with the respective Engage SIP Server

- Workbench Users would logically connect to their local Workbench Primary (WB IO application) instance and can access the features of WB

- Workbench Users can connect to either their local or a remote Data-Center Workbench instance; this provides redundancy

- If the Workbench Agent component is installed on any Genesys Application Servers (e.g. SIP, URS, FWK), the Metric data from those hosts will be sent to the local Workbench node/cluster for storage, providing visualizations via the Workbench Dashboard feature

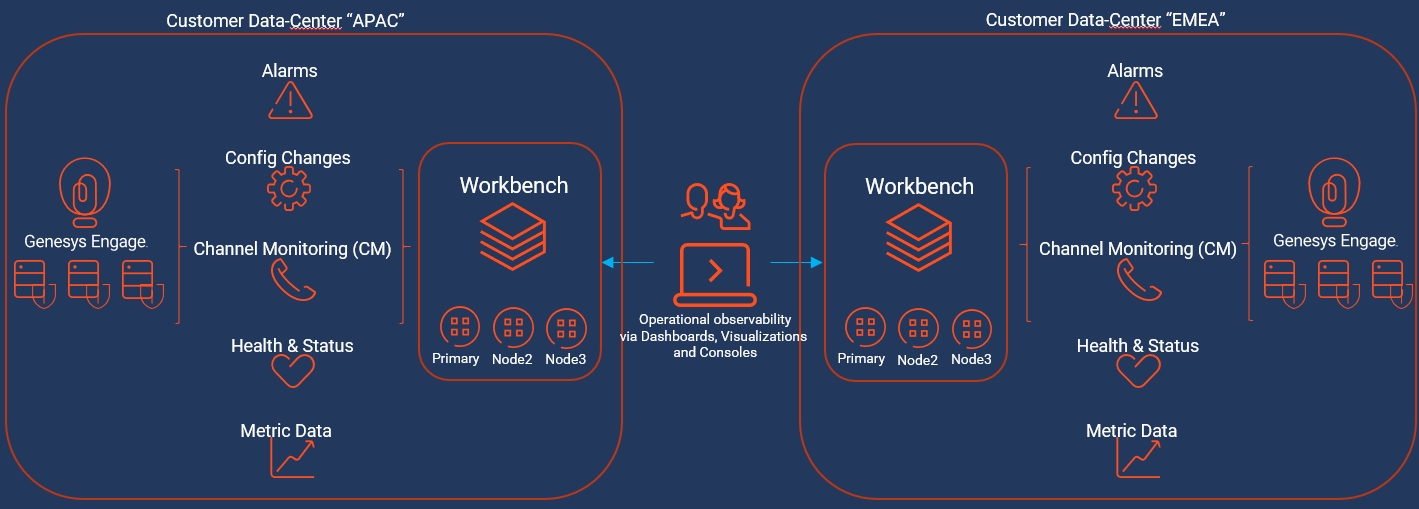

Workbench "Cluster" architecture with multi Engage Data-Center

The example architecture below illustrates a Workbench Cluster within a multi Data-Center deployment:

- A Genesys Engage multi Data-Center/Site (e.g. APAC & EMEA) deployment

- The Workbench Primary at each Data-Center integrates with the respective local Configuration Server

- The Workbench Primary at each Data-Center integrates with the respective local Solution Control Server and associated Message Server

- The Workbench Channel Monitoring feature functions via the WB IO application integrating with the respective Engage SIP Server

- Workbench Users would logically connect to their local Workbench Primary (WB IO application) instance and can access the features of WB

- Workbench Users can connect to either their local or a remote Data-Center Workbench instance; this provides redundancy

- If the Workbench Agent component is installed on any Genesys Application Servers (e.g. SIP, URS, FWK), the Metric data from those hosts will be sent to the local Workbench node/cluster for storage, providing visualizations via the Workbench Dashboard feature

- For resiliency, Workbench Node 2 contains event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

- For resiliency, Workbench Node 3 contains event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

- Workbench High-Availability (HA) provides resiliency of event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

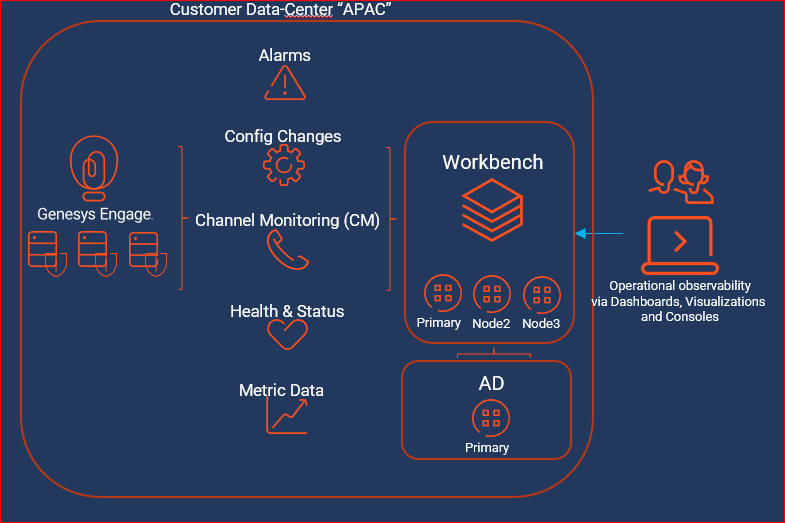

Workbench Anomaly Detection (AD) with a Workbench "Cluster" HA architecture within a single Engage Data-Center

The example architecture below illustrates Workbench Anomaly Detection (AD) with a Workbench "Cluster" within a single Data-Center:

- A Genesys Engage single Data-Center/Site (e.g. APAC) deployment

- The Workbench Primary node integrates with the Engage Master Configuration Server (CS)

- The Workbench Primary node integrates with the Engage Solution Control Server (SCS) and associated Message Server (MS)

- The Workbench Channel Monitoring feature functions via the WB IO application integrating with the respective Engage SIP Server

- Workbench Users connect to the Workbench Primary (WB IO application) instance and can access the features of WB

- For HA resiliency, Workbench Node 2 contains event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

- For HA resiliency, Workbench Node 3 contains event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

- A single Workbench Anomaly Detection (AD) Primary node; the AD feature is therefore not running in HA mode

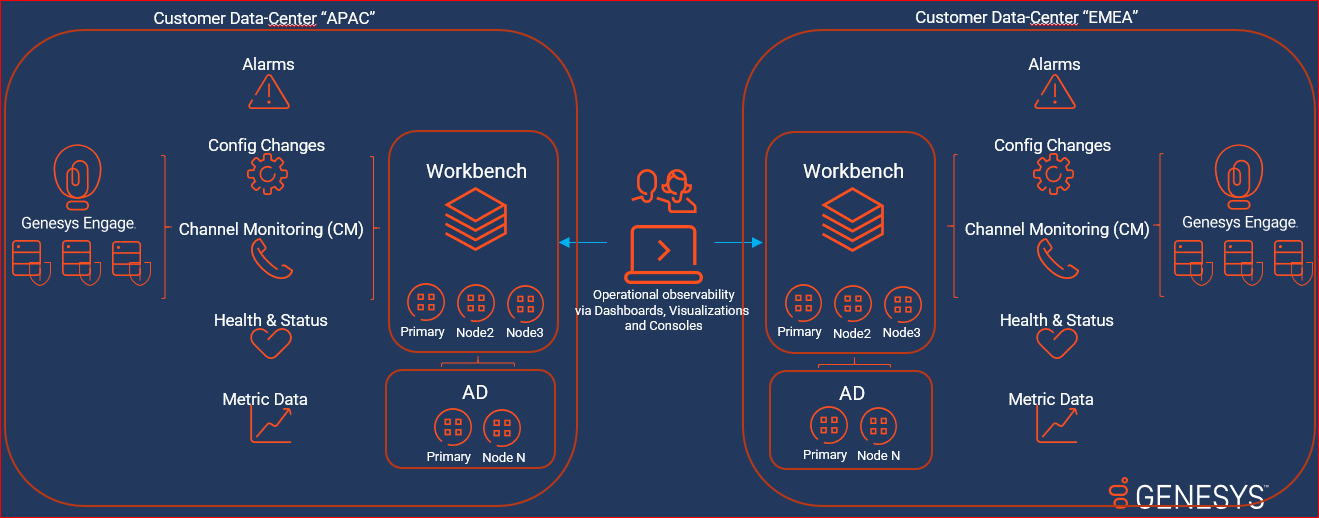

Workbench Anomaly Detection (AD) HA with a Workbench "Cluster" HA architecture within a multi Engage Data-Center

The example architecture below illustrates Workbench Anomaly Detection (AD) HA with a Workbench "Cluster" within a multi Data-Center deployment:

- A Genesys Engage multi Data-Center/Site (e.g. APAC & EMEA) deployment

- The Workbench Primary at each Data-Center integrates with the respective local Configuration Server

- The Workbench Primary at each Data-Center integrates with the respective local Solution Control Server and associated Message Server

- The Workbench Channel Monitoring feature functions via the WB IO application integrating with the respective Engage SIP Server

- Workbench Users would logically connect to their local Workbench Primary (WB IO application) instance and can access the features of WB

- Workbench Users can connect to either their local or a remote Data-Center Workbench instance; this provides redundancy

- If the Workbench Agent component is installed on any Genesys Application Servers (e.g. SIP, URS, FWK), the Metric data from those hosts will be sent to the local Workbench node/cluster for storage, providing visualizations via the Workbench Dashboard feature

- For resiliency, Workbench Node 2 contains event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

- For resiliency, Workbench Node 3 contains event data (via Workbench Elasticsearch) and configuration data (via Workbench ZooKeeper)

- Workbench Anomaly Detection (AD) Primary node and Node 2; the AD feature is therefore running in HA mode

Workbench Data-Centers

A Workbench (WB) Data-Center (DC) is a logical concept: a grouping of Workbench components that are typically deployed in the same physical location, i.e. the same "Data-Center" or "Site".

For example, a distributed WB solution could consist of a three Data-Center deployment: "APAC", "EMEA" and "LATAM".

Each WB Data-Center runs Workbench components such as:

- Workbench IO

- Workbench Agent

- Workbench Elasticsearch

- Workbench Kibana

- Workbench Logstash

- Workbench ZooKeeper

When installing Workbench, the user must provide a Data-Center name; post-install, the respective Workbench components are assigned to that Data-Center.

Workbench Data-Centers provide:

- logical separation of Workbench components based on physical location

- a logical and optimised data ingestion architecture

  - e.g. APAC Metric data from the SIP, URS and GVP Servers will be ingested into the APAC Workbench instance/Cluster

- a holistic view of multiple Workbench deployments at different Data-Centers, all synchronised to form a Workbench distributed architecture

  - e.g. a user can log into the APAC Workbench instance and visualise Alarms, Changes and Channel Monitoring events/data not only from the local APAC WB instance/Cluster, but also from the "EMEA" and "LATAM" Data-Center Workbench instances

Data-Center assignment rules:

- A Workbench host object cannot be assigned to a different Data-Center

- A Genesys Engage host object (e.g. SIP, URS, FWK) can be re-assigned to a different Data-Center
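The Data-Center assignment and ingestion rules above can be modelled in a few lines. The Python sketch below is hypothetical: `HostObject`, `reassign` and `ingesting_data_center` are illustrative names, and the check mirrors only the rules stated in this section, not Workbench's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class HostObject:
    name: str
    data_center: str         # Data-Center name given at install time
    is_workbench_host: bool  # False for Genesys Engage hosts (SIP, URS, FWK...)


def reassign(host: HostObject, new_dc: str) -> None:
    """Move a host object to another Data-Center, enforcing the rules above."""
    if host.is_workbench_host and new_dc != host.data_center:
        raise ValueError(
            f"Workbench host {host.name!r} cannot be assigned to a different Data-Center")
    host.data_center = new_dc


def ingesting_data_center(host: HostObject) -> str:
    """Metric data from a host is ingested by its own Data-Center's Workbench."""
    return host.data_center


sip = HostObject("sip-01", "APAC", is_workbench_host=False)
reassign(sip, "EMEA")
print(ingesting_data_center(sip))  # EMEA

wb = HostObject("wb-primary", "APAC", is_workbench_host=True)
try:
    reassign(wb, "EMEA")
except ValueError as err:
    print(err)
```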

Future Workbench 9.x Architectures/Footprints

- Workbench 9.x architectures/footprints may change as future roadmap features are released; roadmap features are subject to change and timescales are TBD.

Workbench Agent and Workbench Agent Remote

- Workbench Agent 8.5 is ONLY for LFMT

- Workbench Agent 9.x is ONLY for Workbench 9.x Hosts

- If/when both Workbench and LFMT are deployed, both Workbench Agent 8.5 and a Workbench 9.x agent would be needed on each remote host

- Workbench Agent 8.5 would be required for LFMT to collect log files from the remote hosts (e.g. SIP, URS, GVP)

- The Workbench 9.x agent (Workbench Agent Remote on remote Genesys hosts) would be required for Workbench to ingest data from the remote hosts (e.g. SIP, URS, GVP)

- Workbench Agent Remote (WAR) 9.x is ONLY deployed on remote Genesys hosts such as SIP, URS, GVP; this component sends Metric data to the Workbench 9.x Server/Cluster
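The agent rules above can be expressed as a small lookup. The Python sketch below is hypothetical and reflects one reading of this section: it treats the 9.x agent on remote Genesys hosts as Workbench Agent Remote (per the WAR bullet) and assumes LFMT log collection only targets remote hosts.

```python
def required_agents(is_workbench_host: bool, lfmt: bool, workbench: bool) -> set:
    """Return the agent packages to install on one host (illustrative only)."""
    agents = set()
    if is_workbench_host:
        # Workbench Agent 9.x is only for Workbench 9.x hosts.
        if workbench:
            agents.add("Workbench Agent 9.x")
        return agents
    # Remote Genesys host (e.g. SIP, URS, GVP).
    if lfmt:
        agents.add("Workbench Agent 8.5")         # LFMT log file collection
    if workbench:
        agents.add("Workbench Agent Remote 9.x")  # Metric data to Workbench 9.x
    return agents


print(sorted(required_agents(is_workbench_host=False, lfmt=True, workbench=True)))
# ['Workbench Agent 8.5', 'Workbench Agent Remote 9.x']
```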

Workbench Version Alignment

- Workbench versions on ALL Nodes and at ALL Data-Centers should be the same release; for example, do NOT mix 9.0.000.00 with 9.1.000.00.
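A pre-upgrade or health check for this rule could be sketched as follows, assuming each node's Workbench release has already been gathered into a simple inventory (by hand or with your own tooling). The inventory format and the `releases_in_use` helper are hypothetical.

```python
from collections import defaultdict


def releases_in_use(inventory: dict) -> dict:
    """Group nodes by Workbench release; more than one key means misalignment.

    `inventory` maps a node label such as "APAC/Node 2" to its release string.
    """
    groups = defaultdict(list)
    for node, release in inventory.items():
        groups[release].append(node)
    return {release: sorted(nodes) for release, nodes in groups.items()}


inventory = {
    "APAC/Primary": "9.1.000.00",
    "APAC/Node 2": "9.1.000.00",
    "EMEA/Primary": "9.0.000.00",
}
groups = releases_in_use(inventory)
if len(groups) > 1:
    print("Mixed Workbench releases:", groups)
```

Grouping by release, rather than comparing against one expected version, also shows which nodes or Data-Centers are out of step.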